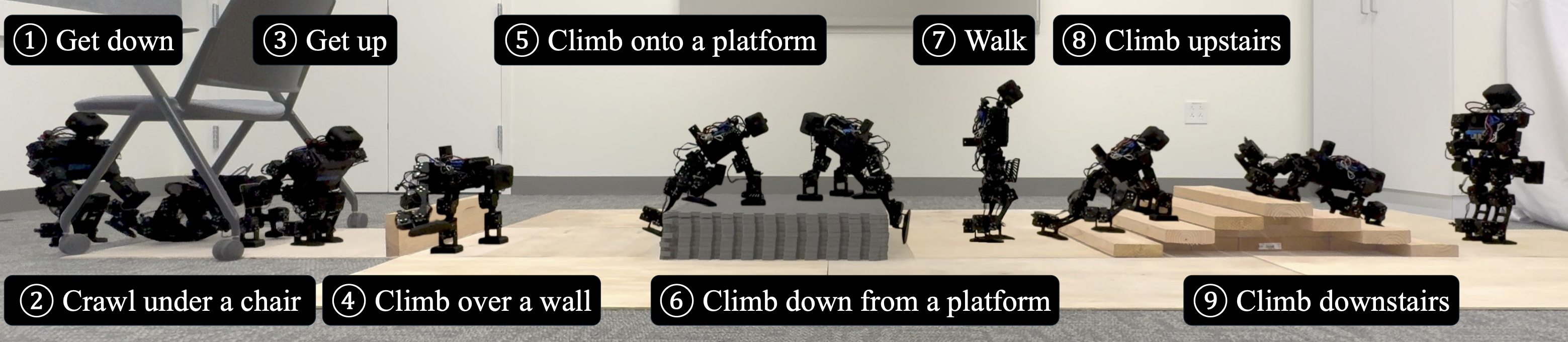

Locomotion Beyond Feet enables whole-body humanoid locomotion on diverse and challenging terrains, including low-clearance spaces, knee-high walls and platforms, and steep stairs, by chaining nine distinct locomotion skills.

Abstract

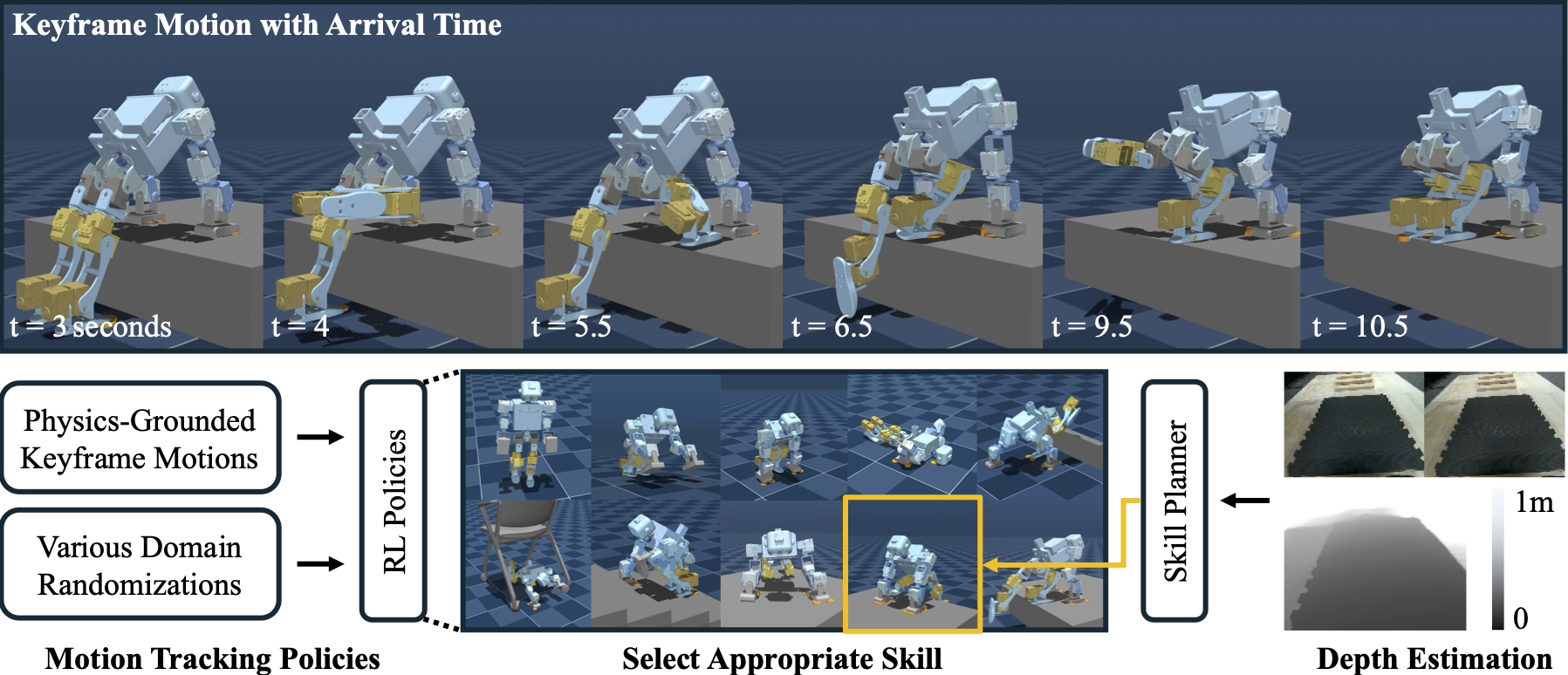

Most locomotion methods for humanoid robots focus on leg-based gaits, yet natural bipeds frequently rely on hands, knees, and elbows to establish additional contacts for stability and support in complex environments. This paper introduces Locomotion Beyond Feet, a comprehensive system for whole-body humanoid locomotion across extremely challenging terrains, including low-clearance spaces under chairs, knee-high walls, knee-high platforms, and steep ascending and descending stairs. Our approach addresses two key challenges: contact-rich motion planning and generalization across diverse terrains. To this end, we combine physics-grounded keyframe animation with reinforcement learning. Keyframes encode human knowledge of motor skills, are embodiment-specific, and can be readily validated in simulation or on hardware, while reinforcement learning transforms these references into robust, physically accurate motions. We further employ a hierarchical framework consisting of terrain-specific motion-tracking policies, failure recovery mechanisms, and a vision-based skill planner. Real-world experiments demonstrate that Locomotion Beyond Feet achieves robust whole-body locomotion and generalizes across obstacle sizes, obstacle instances, and terrain sequences. All work will be open-sourced.
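The hierarchical framework can be pictured as a two-rate loop: a skill planner that updates slowly and terrain-specific tracking policies that act at every control step, with failure recovery able to preempt the planner. The sketch below is a hypothetical illustration under stated assumptions, not the authors' implementation. Only the 3.1 Hz figure comes from this page (the Method section's planner description). The 50 Hz control rate, the tilt-based fall check and its threshold, the skill names `"walk"` and `"recover"`, and the `plan` callback are all invented for this example.

```python
from dataclasses import dataclass
from typing import Callable, List

PLANNER_HZ = 3.1   # assumed to match the 3.1 Hz depth input described in the Method section
CONTROL_HZ = 50.0  # hypothetical low-level policy rate (assumption)
TILT_LIMIT = 1.0   # hypothetical fall threshold on torso tilt, rad (assumption)


@dataclass
class Obs:
    depth_features: List[float]  # stand-in for the depth-estimation output
    torso_tilt: float            # torso tilt derived from the IMU, rad


def run(steps: int,
        get_obs: Callable[[int], Obs],
        plan: Callable[[Obs, str], str],
        skill: str = "walk") -> List[str]:
    """Return the active skill at each control step.

    The planner is queried at roughly PLANNER_HZ. A hypothetical tilt check
    runs every step and switches to a recovery skill; once the robot is
    upright again, the planner is queried immediately.
    """
    period = CONTROL_HZ / PLANNER_HZ  # control steps between planner updates
    next_plan_step = 0.0
    active: List[str] = []
    for k in range(steps):
        obs = get_obs(k)
        due = k >= next_plan_step
        if due:
            next_plan_step += period     # keeps the average planner rate exact
        if abs(obs.torso_tilt) > TILT_LIMIT:
            skill = "recover"            # failure recovery preempts planning
        elif due or skill == "recover":
            skill = plan(obs, skill)     # planner sees depth, IMU, and current skill
        # A terrain-specific tracking policy for `skill` would act here.
        active.append(skill)
    return active
```

With these assumed rates, a 50-step rollout on flat ground queries the planner four times (at steps 0, 17, 33, and 49), while a brief tilt spike forces `"recover"` and triggers an extra planner query on the first upright step.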

Different Obstacle Courses

Keyframe Editing Tool

With our open-source, intuitive keyframe editing tool, we can easily compose complex motion sequences, such as getting off a cart.
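This page does not describe the tool's file format. As a hypothetical illustration of composing a longer motion from shorter keyframe segments (for example, the pieces of getting off a cart), the sketch below assumes each keyframe is a timestamp plus a tuple of joint positions. The `Keyframe` class, the `compose` function, its `gap` parameter, and the segment names are invented for this example.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Keyframe:
    """One keyframe: a robot pose (joint positions, rad) reached at time t (s)."""
    t: float
    joints: Tuple[float, ...]


def compose(*segments: List[Keyframe], gap: float = 0.5) -> List[Keyframe]:
    """Chain keyframe segments into one sequence.

    Each segment is re-timed to start `gap` seconds after the previous
    segment's final keyframe; the first non-empty segment starts at t = 0.
    """
    sequence: List[Keyframe] = []
    offset = 0.0
    for segment in segments:
        if not segment:
            continue  # skip empty segments
        start = segment[0].t
        for kf in segment:
            sequence.append(Keyframe(kf.t - start + offset, kf.joints))
        offset = sequence[-1].t + gap
    return sequence


# Example: two hypothetical segments with a two-joint pose, chained back to back.
sit = [Keyframe(0.0, (0.0, 0.0)), Keyframe(1.0, (0.5, -0.5))]
step_down = [Keyframe(2.0, (0.2, 0.1)), Keyframe(3.0, (0.0, 0.0))]
sequence = compose(sit, step_down)
print([kf.t for kf in sequence])  # [0.0, 1.0, 1.5, 2.5]
```

Re-timing each segment relative to its own first keyframe lets segments be authored independently and then chained in any order.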

Robustness

Method

Our system consists of three main stages. (1) We generate physics-grounded keyframe motions using a physics-aware GUI application, in which robot poses and timing are specified interactively. (2) We interpolate these keyframes into reference motions that define the tracking rewards for reinforcement learning policies. We apply extensive domain randomization, including variations in initial robot states, obstacle dimensions, and IMU noise, to achieve robust sim-to-real transfer. (3) A learned skill planner processes depth input from a depth estimation module at 3.1 Hz, together with IMU readings and the current skill state, and selects the appropriate next skill.
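Stage (2) can be illustrated with a minimal sketch. It assumes linear interpolation of joint positions between keyframes and an exponential joint-tracking reward, a common choice in motion-imitation RL. The page does not specify the interpolation scheme, the reward form, or the randomization ranges, so the function names, the `sigma` value, and all ranges below are hypothetical placeholders rather than the authors' settings.

```python
import math
import random
from bisect import bisect_right
from typing import Dict, List, Sequence, Tuple

# (time in seconds, joint positions in radians); a hypothetical keyframe format
Keyframe = Tuple[float, Sequence[float]]


def interpolate(keyframes: List[Keyframe], t: float) -> List[float]:
    """Linearly interpolate joint positions at time t.

    Keyframes must be sorted by time; t is clamped to the keyframe range.
    """
    times = [k[0] for k in keyframes]
    if t <= times[0]:
        return list(keyframes[0][1])
    if t >= times[-1]:
        return list(keyframes[-1][1])
    i = bisect_right(times, t)  # guarantees times[i - 1] <= t < times[i]
    (t0, q0), (t1, q1) = keyframes[i - 1], keyframes[i]
    alpha = (t - t0) / (t1 - t0)
    return [a + alpha * (b - a) for a, b in zip(q0, q1)]


def reference_motion(keyframes: List[Keyframe], dt: float) -> List[List[float]]:
    """Sample the interpolated motion at a fixed control period dt."""
    steps = int(round((keyframes[-1][0] - keyframes[0][0]) / dt)) + 1
    return [interpolate(keyframes, keyframes[0][0] + k * dt) for k in range(steps)]


def tracking_reward(q: Sequence[float], q_ref: Sequence[float], sigma: float = 0.25) -> float:
    """Exponential tracking reward between 0 and 1; equals 1 when q matches q_ref exactly."""
    err = sum((a - b) ** 2 for a, b in zip(q, q_ref))
    return math.exp(-err / sigma ** 2)


def sample_randomization(rng: random.Random, num_joints: int) -> Dict[str, object]:
    """Draw one episode's randomized parameters (all ranges are illustrative only)."""
    return {
        "init_joint_offsets": [rng.uniform(-0.1, 0.1) for _ in range(num_joints)],  # rad
        "obstacle_scale": rng.uniform(0.8, 1.2),  # multiplier on nominal obstacle size
        "imu_noise_std": rng.uniform(0.0, 0.05),  # rad/s, added to gyro readings
    }
```

In a training loop built on these assumptions, each episode would call `sample_randomization` once, and the reward at control step `k` would compare the robot's joint positions with `reference_motion(keyframes, dt)[k]`.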

BibTeX

@misc{yang2026locomotion,
  title = {Locomotion {{Beyond Feet}}},
  author = {Yang, Tae Hoon and Shi, Haochen and Hu, Jiacheng and Zhang, Zhicong and Jiang, Daniel and Wang, Weizhuo and He, Yao and Wu, Zhen and Chen, Yuming and Hou, Yifan and Kennedy, Monroe and Song, Shuran and Liu, C. Karen},
  year = 2026,
  month = jan,
  number = {arXiv:2601.03607},
  eprint = {2601.03607},
  primaryclass = {cs},
  publisher = {arXiv},
  doi = {10.48550/arXiv.2601.03607},
  urldate = {2026-01-08},
  archiveprefix = {arXiv},
  keywords = {Computer Science - Robotics}
}

Acknowledgment

The authors would like to express their gratitude for the helpful discussions with all members of TML and REALab. This work was supported in part by the NSF Award #2143601, #2037101, #2132519, #2153854, Sloan Fellowship, and Stanford Institute for Human-Centered AI. The views and conclusions contained herein are those of the authors and should not be interpreted as necessarily representing the official policies, either expressed or implied, of the sponsors.